Since the start of the coronavirus crisis, professionals around the world have been forced to shift from working in their offices to working from home. This makes getting in touch with peers and collaborating in teams considerably more challenging than in person, even though screen-sharing and videoconferencing tools already support the switch to a great extent.

Immersive technologies such as augmented reality (AR) and virtual reality (VR) cannot reduce the physical distance between people. They promise, however, to reduce the mental distance between co-workers. How? By creating a stronger sense of presence than conventional desk-based solutions. This sense of presence is a crucial foundation for meaningful interaction.

AR/VR technology has not yet achieved widespread diffusion. Most employees still communicate through their laptops' integrated webcams and microphones. Nevertheless, progress is accelerating: studies on collaborative mixed reality are being conducted in academic settings to advance knowledge on how to integrate these novel technologies into everyday working life. At the same time, commercial products are starting to appear on the market to meet the rising demand.

This article builds on a recent review of collaborative immersive technology by the team around Prof. Mark Billinghurst, one of the leading research groups in this field (Ens et al., 2019), and complements its academic perspective with commercial examples. The researchers define five potential collaborative work scenarios:

- Remote expert

- Shared workspace

- Shared experience

- Telepresence

- Co-annotation

Each of the scenarios is briefly outlined, illustrated with an academic study and then connected to a similar solution that is commercially available. We hope to give you a glimpse into the future of remote work and the exciting possibilities immersive technologies can provide.

Remote expert

The remote expert scenario describes situations where a local user needs the support of an expert who is not on site. Traditionally, the local user has had to wait for the expert to arrive in person, which is costly in both time and money. Mixed reality allows the local user to share their environment with the remote expert, who, also using immersive technology, can then better understand the problem. In turn, the remote expert can guide the local technician with cues such as recognized hand gestures, an indication of their own field of view or tracked eye gaze, all conveyed in real time.

The most readily available way to share the local environment with a remote expert is a smartphone camera or a camera integrated into an AR headset. This, however, has a downside: the remote expert is constrained to the view the local user provides and cannot explore the environment freely. To mitigate this limitation, researchers are investigating the use of 360-degree cameras, whose all-round perspective lets the remote expert explore the local user's surroundings independently and more naturally. One such study found that this view independence enhances co-presence in collaboration and that the available gesturing options improve mutual understanding between users (Lee et al., 2019).

Microsoft offers a commercial remote expert solution with Microsoft Dynamics 365 Remote Assist. It is available for Microsoft's own AR headset, HoloLens, as well as for Android and iOS devices. Besides allowing two HoloLens users to collaborate remotely in the way described above, it also works across platforms, so professionals can share their environment from a head-mounted device such as HoloLens with someone using a smartphone.

Shared workspace

The shared workspace scenario focuses on situations where collaborators manipulate and interact with a shared virtual object. Collaborators can either be in the same location or work remotely. This is particularly relevant for teams that discuss and work on virtual product prototypes at the same time or analyse data together.

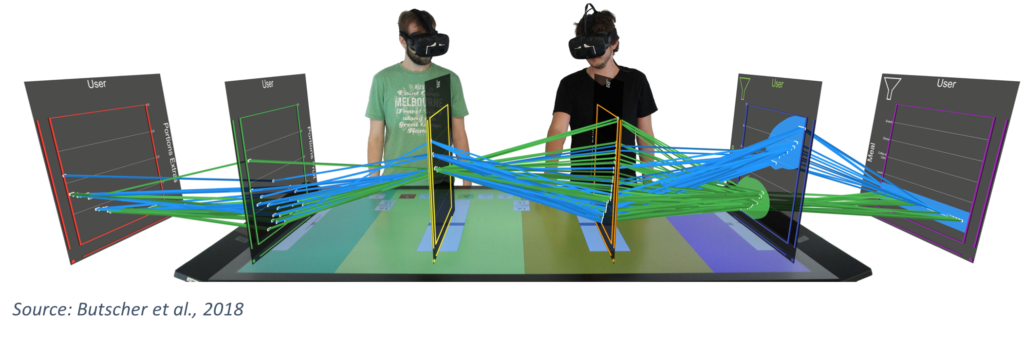

For data analysts, researchers have created ART (Augmented Reality above the Tabletop), a collaborative analysis tool that visualizes multidimensional data in augmented reality. This facilitates immersion in the data, a fluid analysis process and collaboration (Butscher et al., 2018).

In industry, Virtualitics is an emerging data analysis tool that uses artificial intelligence to support human decision-makers. It allows analysts to explore data in immersive virtual reality environments together with their colleagues.

Shared experience

The shared experience scenario focuses on the interpersonal experience itself rather than on a specific virtual or physical object or on communication. This covers a range of settings, from collocated games to explorations of how mixed reality affects social interactions. Such applications could, for example, facilitate role-plays when practicing a business negotiation.

Researchers have investigated the effect that virtual masks can have when placed on someone's face. Study participants could place various virtual masks on another person by pointing their smartphone camera at them. After having a virtual mask placed on their own face by someone else, participants raised concerns about identity and personal space (Poretski et al., 2018).

Snapchat's filters, which overlay virtual masks on one's face, are a commercial counterpart. In this case, however, the masks are typically placed on the user's own face rather than on someone else's. Similarly, Apple devices allow users to create their own avatars, called Memoji, which mimic the user's facial expressions using the TrueDepth camera system.

Telepresence

Telepresence covers setups that focus primarily on communication between two or more participants. It is the next evolutionary step of today's ubiquitous videoconferencing, which connects people throughout the world.

On the academic side, the Holoportation project aims to enhance remote virtual collaboration and bring it closer to face-to-face communication. The system uses a new set of depth cameras to reconstruct an entire space in real time, including people, furniture and objects, in high quality. An initial qualitative study indicates that remote partners experience more seamless interactions, making the system “way better than phone calls and video chats” (Orts-Escolano et al., 2016).

On the commercial side, Spatial allows users to create a realistic avatar from a single picture. With this avatar, users join a virtual environment in which discussions with co-workers feel more natural, allowing teams to “sit next to each other from across the world”. Spatial also integrates tools such as object sharing and visualisation, which makes it an example of the shared workspace and co-annotation scenarios as well.

Co-annotation

Finally, co-annotation describes systems that allow users to place virtual annotations on an object or environment that others can read. While the scenarios described above may include similar features, a distinctive trait of such systems is that annotations can be attached to physical or virtual objects to be read later, that is, asynchronously. One example is a virtual dashboard on the shop floor that is updated across shifts.

Scientists have created various systems that support such annotations. One of them uses tangible markers that give collaborators a physical interface and also serve as physical containers for annotations, allowing collaborators to interact with them physically. This form factor recognizes both attached and detached annotations and supports asynchronous collaboration between multiple stakeholders, both locally and remotely (Irlitti et al., 2013).

Since many of the applications described above include co-annotation features, here is a more playful example of how co-annotation can work: WallaMe lets users create virtual graffiti on walls and tie it to a geolocation. Friends and other users can view the virtual drawings through their smartphones and enjoy the messages left for them.

While the use cases presented here are still in their infancy, technological maturity is on the horizon. We believe that the adoption of immersive technology will continue and accelerate in the coming years, opening up new and exciting ways to engage and collaborate with peers. Let us know in the comments below how you would like to integrate AR or VR into your team's work!

References

Butscher, S., Hubenschmid, S., Müller, J., Fuchs, J., & Reiterer, H. (2018). Clusters, trends, and outliers: How immersive technologies can facilitate the collaborative analysis of multidimensional data. Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, 1–12. https://doi.org/10.1145/3173574.3173664

Ens, B., Lanir, J., Tang, A., Bateman, S., Lee, G., Piumsomboon, T., & Billinghurst, M. (2019). Revisiting collaboration through mixed reality: The evolution of groupware. International Journal of Human-Computer Studies, 131, 81–98. https://doi.org/10.1016/j.ijhcs.2019.05.011

Irlitti, A., Von Itzstein, S., Alem, L., & Thomas, B. (2013, October). Tangible interaction techniques to support asynchronous collaboration.

Lee, G. A., Teo, T., Kim, S., & Billinghurst, M. (2019). A user study on MR remote collaboration using live 360 video. Proceedings of the 2018 IEEE International Symposium on Mixed and Augmented Reality (ISMAR 2018), 153–164. https://doi.org/10.1109/ISMAR.2018.00051

Orts-Escolano, S., Kim, D., Cai, Q., Rhemann, C., Davidson, P., Chou, P., Fanello, S., Khamis, S., Mennicken, S., Chang, W., Dou, M., Valentin, J., Kowdle, A., Tankovich, V., Pradeep, V., Degtyarev, Y., Loop, C., Wang, S., Kang, S. B., … Izadi, S. (2016). Holoportation: Virtual 3D teleportation in real-time. Proceedings of the 29th Annual Symposium on User Interface Software and Technology (UIST 2016), 741–754. https://doi.org/10.1145/2984511.2984517

Poretski, L., Lanir, J., & Arazy, O. (2018). Normative tensions in shared augmented reality. Proceedings of the ACM on Human-Computer Interaction, 2(CSCW), 1–22. https://doi.org/10.1145/3274411
